Pandas With Python

by Sachin Rathi in Apps/DevOps, General

Pandas (Python Data Analysis Library) is a data structures and analysis library. It’s used for analysis and data science. Other data science toolsets like SciPy, NumPy, and Matplotlib can create end-to-end analytic workflows to solve business problems.

One of the major issues with data analytics is missing or malformed or erroneous data. This “dirty” data can result in incomplete or (worse) incorrect analysis. To resolve this “dirty” data problem Panda provides a powerful library of functions to help you clean up, sort through, and make sense of your datasets, no matter what state they’re in.

So how do we clean Dirty Data using Pandas & Python?

Let’s go through some Pandas technique you can use to clean up your dirty data.

Let’s start

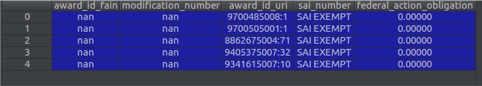

Look at your data

data.head()

Dealing the missing data

The most common problem is missing data. Blank or missing data typically ends up causing issues during data analysis. Below are a few ways of dealing with missing data using Pandas:

- Replace missing data with default value –

This replace the NaN to 0.

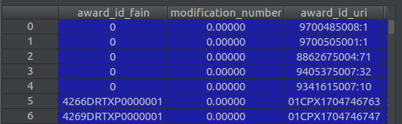

- Remove of the rows and columns that have missing data

data.dropna()

Remove all award_id_fain which have NA value

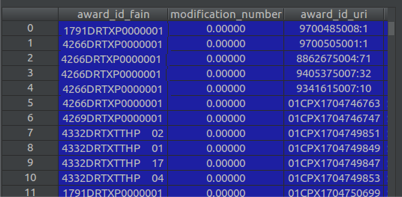

Dealing the error-prone columns

Pandas allows us to also operate upon column(s) instead of rows. This can be done by using the parameter axis=1 in our code.

- Drop the columns that have NA values:

data.dropna(axis=1, how=’all’)

- Drop all columns with any NA values:

data.dropna(axis=1, how=’any’)

Normalize data types

data = pd.read_csv(‘student_data.csv’, dtype={‘section’: int})

This tells Pandas that the column ‘section’ needs to be an integer value.

Save your results

After completed cleaning the data, if you may want to export it back into CSV format for further processing in another program. This is easy to do in Pandas:

data.to_csv(‘cleanfile.csv’ encoding=’utf-8’)